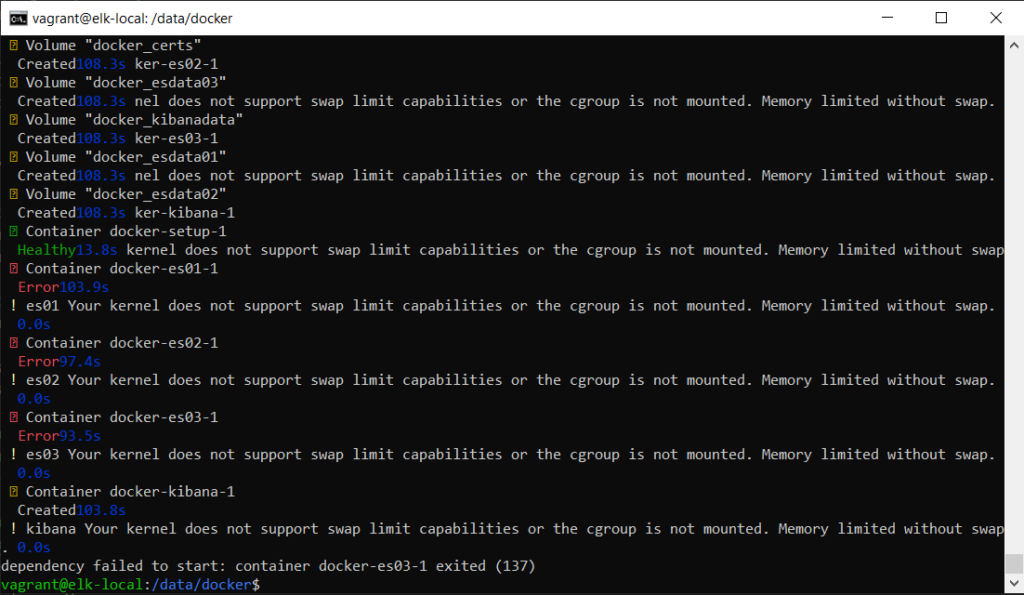

The first error you will face right from the get-go is the Kibana warning “Your kernel does not support swap limit capabilities or the cgroup is not mounted. Memory limited without swap”, followed by the exit error “dependency failed to start: container docker-es03-1 exited (137)”.

Searching for these errors on Google or elsewhere on the internet mostly yields results that point to swap memory and a memory limit being hit.

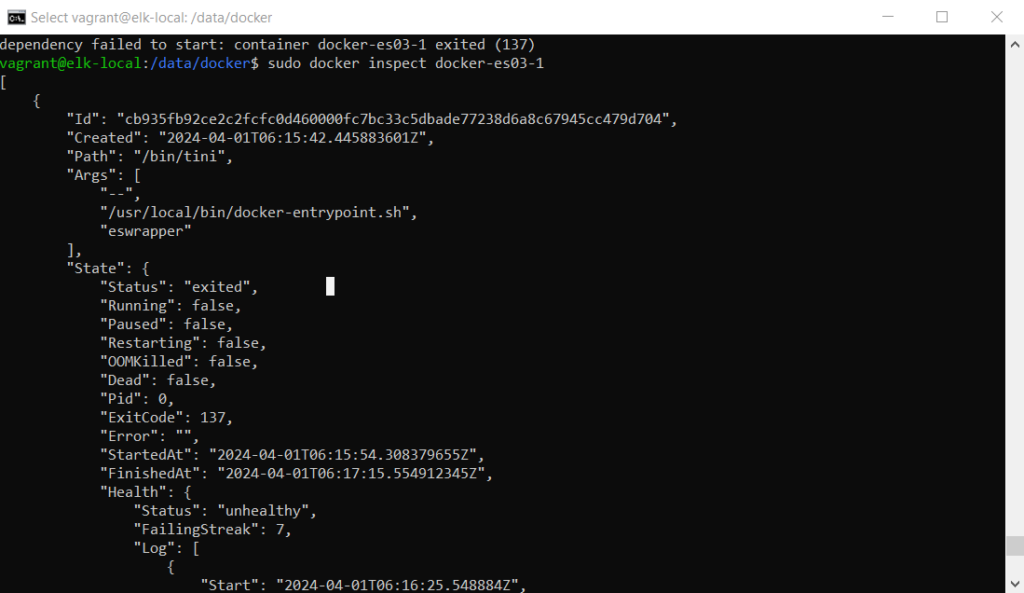

To confirm the cause, it is best to check whether the system actually reported an Out Of Memory (OOM) kill. In this instance docker compose was used to spin up Elasticsearch, so inspect the exited container with the following command:

sudo docker inspect docker-es03-1

In the console output, look for the OOMKilled value in the State section.
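Rather than scrolling through the full JSON, the relevant fields can be pulled out directly with the --format option of docker inspect. This is only a minimal sketch: the helper name container_state and the DOCKER variable are my own inventions, and the container name is the one from the error above.

```shell
#!/bin/sh
# Hypothetical helper: print the exit code and OOMKilled flag of one container.
# DOCKER is an assumed override so the command can run with or without sudo.
DOCKER="${DOCKER:-sudo docker}"

container_state() {
  # .State.ExitCode and .State.OOMKilled are fields of the docker inspect output.
  # $DOCKER is left unquoted on purpose so "sudo docker" splits into two words.
  $DOCKER inspect --format 'exit={{.State.ExitCode}} oomkilled={{.State.OOMKilled}}' "$1"
}

# Usage: container_state docker-es03-1
```

The result is a single line per container, which is easier to compare across es01, es02, es03 and Kibana than the full inspect output.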

An OOMKilled value of false indicates that the exit code 137 was not caused by an Out Of Memory kill. The next step is therefore to address the swap limit warning by updating the GRUB bootloader configuration of Ubuntu 20.04 LTS, so that memory cgroup and swap accounting are enabled in the kernel:

sudo sed -i 's/GRUB_CMDLINE_LINUX=""/GRUB_CMDLINE_LINUX="cgroup_enable=memory swapaccount=1"/g' /etc/default/grub

sudo update-grub

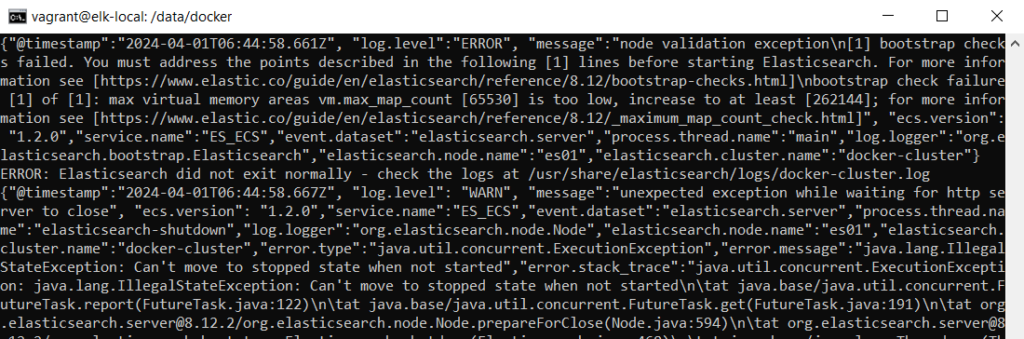
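Note that the sed command above only matches when GRUB_CMDLINE_LINUX is empty, so it is worth confirming that the flag was actually written and, after the reboot, that the running kernel picked it up. A minimal sketch, with helper names that are purely my own:

```shell
#!/bin/sh
# Hypothetical helpers to confirm the GRUB change was written and is active.

# True if the GRUB defaults file (default: /etc/default/grub) carries the flag.
grub_has_swapaccount() {
  grep -q '^GRUB_CMDLINE_LINUX=.*swapaccount=1' "${1:-/etc/default/grub}"
}

# True if the running kernel was booted with the flag (only after a reboot).
kernel_has_swapaccount() {
  grep -q 'swapaccount=1' "${1:-/proc/cmdline}"
}

# Usage:
#   grub_has_swapaccount   || echo "sed did not match; edit /etc/default/grub by hand"
#   kernel_has_swapaccount || echo "not active yet; reboot first"
```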

If the swap change does not help after rebooting the OS, check the status and logs of the containers next. Container es03 depends on container es02, so work back along the chain and check the log of container es01. Note that OOMKilled is still false when inspecting the container.

Expect to find an error similar to:
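The Elasticsearch log is verbose, so it can help to filter it down to the failed bootstrap checks. The sketch below is an assumption-laden example: the helper name bootstrap_failures is my own, and the container name docker-es01-1 is guessed by analogy with docker-es03-1.

```shell
#!/bin/sh
# Hypothetical helper: reduce Elasticsearch log output on stdin
# to the failed bootstrap checks, one match per line.
bootstrap_failures() {
  # Print each match up to (not including) the next semicolon.
  grep -o 'bootstrap check failure[^;]*'
}

# Usage (container name docker-es01-1 is an assumption):
#   sudo docker logs docker-es01-1 2>&1 | bootstrap_failures
```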

"log.level":"ERROR", "message":"node validation exception\n[1] bootstrap checks failed. You must address the points described in the following [1] lines before starting Elasticsearch. For more information see [https://www.elastic.co/guide/en/elasticsearch/reference/8.12/bootstrap-checks.html]\nbootstrap check failure [1] of [1]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]; for more information see [https://www.elastic.co/guide/en/elasticsearch/reference/8.12/_maximum_map_count_check.html]"

The vm.max_map_count error can be rectified by making the following changes, as prescribed in https://www.elastic.co/guide/en/elasticsearch/reference/8.12/bootstrap-checks.html

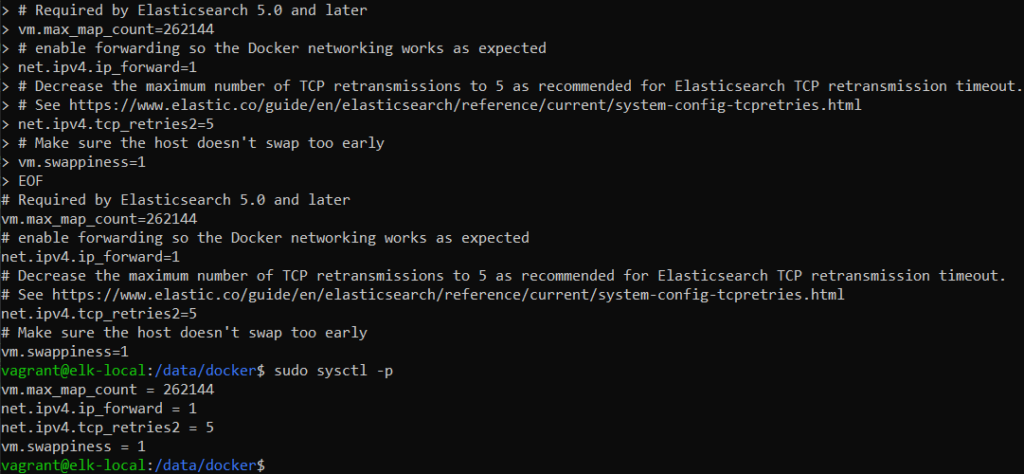

cat <<EOF | sudo tee -a /etc/sysctl.conf

# Required by Elasticsearch 5.0 and later

vm.max_map_count=262144

# enable forwarding so the Docker networking works as expected

net.ipv4.ip_forward=1

# Decrease the maximum number of TCP retransmissions to 5 as recommended for Elasticsearch TCP retransmission timeout.

# See https://www.elastic.co/guide/en/elasticsearch/reference/current/system-config-tcpretries.html

net.ipv4.tcp_retries2=5

# Make sure the host doesn't swap too early

vm.swappiness=1

EOF

sudo sysctl -p
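To confirm that the new value is live, the current setting can be read back and compared with the minimum of 262144 from the error message. A minimal sketch, where the helper name map_count_ok is my own:

```shell
#!/bin/sh
# Hypothetical helper: succeed if vm.max_map_count meets the Elasticsearch minimum.
# The optional argument is the file to read; it defaults to the live kernel value.
map_count_ok() {
  current=$(cat "${1:-/proc/sys/vm/max_map_count}")
  echo "vm.max_map_count=$current"
  [ "$current" -ge 262144 ]
}

# Usage: map_count_ok || echo "still too low; check /etc/sysctl.conf"
```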

Reboot the host OS.

After rebooting the OS, bring the Elasticsearch stack up again with docker compose up.

In my setup with 8096 MB (about 8 GB) of RAM for the Ubuntu OS, something was still amiss: the Kibana container also exited with error 137, and the inspect output still did not show OOMKilled as true.

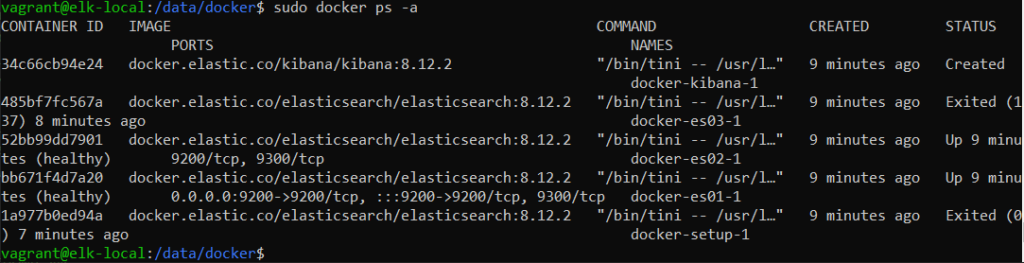

Do a further overall check by adding the -a switch to the end of docker ps, which also lists the containers that have exited.
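With several containers in the stack, it can be quicker to list only the ones that exited with code 137. The following is a rough sketch: the helper name exited_137 is my own, and it assumes the status text looks like "Exited (137) 3 minutes ago".

```shell
#!/bin/sh
# Hypothetical helper: from "name status" lines on stdin,
# print the names of containers that exited with code 137.
exited_137() {
  awk '$2 == "Exited" && $3 == "(137)" { print $1 }'
}

# Usage:
#   sudo docker ps -a --format '{{.Names}} {{.Status}}' | exited_137
```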

This could mean that the memory allocated to each container is not enough. The .env file at elasticsearch/docs/reference/setup/install/docker/.env at 8.13 · elastic/elasticsearch · GitHub shows that only 1GB of memory is provisioned to each Elasticsearch container. For better results, change the memory limit MEM_LIMIT at line 26 to 4GB.

For a simpler way to set up Elasticsearch in a local environment, kindly refer to my previous post on ElasticSearch 8.12 kibana cluster using vagrant and docker compose. For systems with less than 8GB of RAM, do consider running Elasticsearch with a single node instead of 3.
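As far as I can tell, the upstream .env expresses MEM_LIMIT in bytes, so 4GB has to be written as 4 × 1024 × 1024 × 1024 = 4294967296. The sketch below makes that edit under this assumption; the helper name set_mem_limit_gib is my own, and the .env path is whatever file your compose setup reads.

```shell
#!/bin/sh
# Hypothetical helper: set MEM_LIMIT in a .env file to N GiB, expressed in bytes.
# Assumes the file has a line starting with MEM_LIMIT= and that the value is in bytes.
set_mem_limit_gib() {
  env_file=$1
  bytes=$(( $2 * 1024 * 1024 * 1024 ))
  # -i.bak edits in place and keeps the original as <file>.bak
  sed -i.bak "s/^MEM_LIMIT=.*/MEM_LIMIT=${bytes}/" "$env_file"
  # Show the resulting line so the change can be checked by eye.
  grep '^MEM_LIMIT=' "$env_file"
}

# Usage: set_mem_limit_gib .env 4
```

The stack has to be recreated with docker compose up for the new limit to apply.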