The example given is neither exhaustive nor a complete BOM for the MI vacuum robot battery.


ARX-8 Laevatein is the main character's final upgrade in Full Metal Panic. It features the Lambda Driver, the same technology that the antagonist uses.

Bandai did a good job on this kit. While a lot of the parts are reused, some smart design choices make the reused parts feel unique.


This is a nice kit that gives a very basic Nu Gundam at 1/144 scale. Unfortunately, it does not come with the fin funnels.

Bandai does note that the HG 1/144 Nu Gundam fin funnels are compatible with the EG Nu Gundam. Nope, I am not getting another kit just to complete the EG Nu Gundam.


GCPBoleh S6 (rsvp.withgoogle.com)

I never know how to respond to questions about learning rate. I learned from my mentor many years ago that it is undeniable that once we reach our 30s, the rate of learning new things drops. I just know that, with my experience using AWS, I am able to blaze through 3 subjects that would otherwise take many hours.

In cloud computing, it is hard to determine if one is an expert. The depth and breadth of cloud services are too vast. Whenever I hear a person confidently say he is an expert, red flags are raised.

After 3 days of GCPBoleh season 6, which my staff introduced me to, it was an interesting rediscovery that GCP is no longer a SaaS-focused service. That is unlike the 2010s, when AWS was the de facto leader for IaaS, MS Azure for PaaS, and GCP was definitely SaaS, competing with Heroku.

Other than relearning the terminology, how managed cloud services are implemented, and re-familiarizing myself with the web console, I no longer see any difference between AWS, Azure and GCP.

Cloud computing is just outsourcing most of IT operations, and some middleware/frameworks, to a 3rd party vendor. I now agree with my counterpart that not everything can be cloudified.

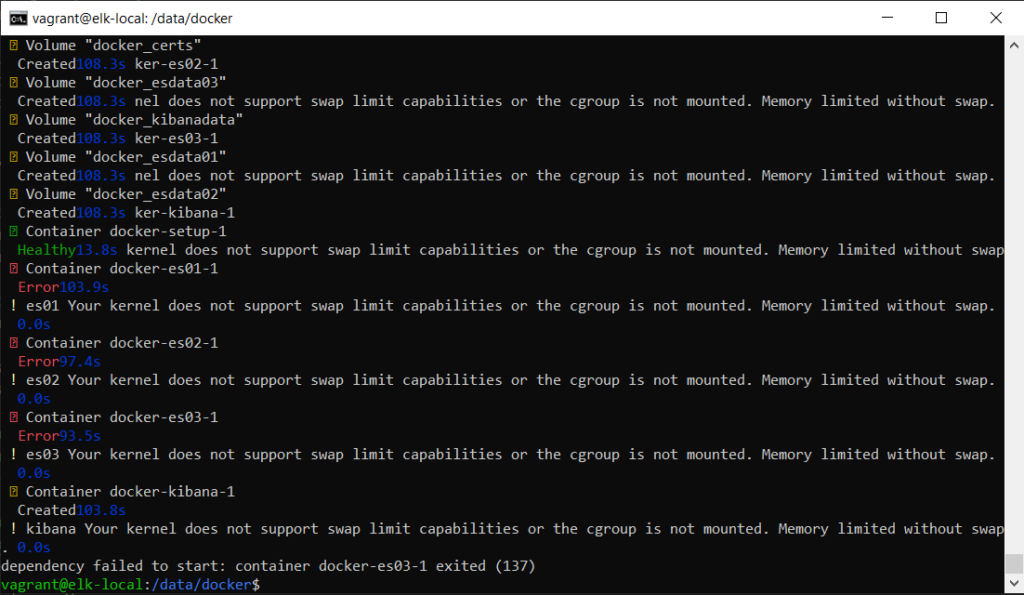

The first error that will be faced from the get-go is “kibana Your kernel does not support swap limit capabilities or the cgroup is not mounted. Memory limited without swap”, followed by the exit error “dependency failed to start: container docker-es03-1 exited (137)”.

Searching for these errors on Google or elsewhere on the internet yields results that point to swap memory and to a memory limit being hit.

Pre-requisite:

– VirtualBox 7.0.14

– Vagrant 2.4.1

– Windows 10 or later

– 16GB RAM (10GB RAM is required to create ElasticSearch with Kibana: 1GB for Kibana and 4GB for each of the 2 ElasticSearch nodes; the rest of the RAM is for the VM host OS)

Overview:

There are 2 layers of virtualization: first VirtualBox, then the docker engine running inside the VirtualBox VM on Ubuntu 20.04 focal.

The orchestration used at the host OS level (Windows 10) is HashiCorp Vagrant. Vagrant is used to configure the Ubuntu 20.04 VM so that docker runs properly on it.

Then docker compose v2 is used to create the ElasticSearch 8.12 cluster or stack.

The downside of this example is that vagrant up needs to be run once initially to configure the VM's Ubuntu 20.04 OS. I have yet to discover whether Vagrant has the ability to bootstrap grub and configure sysctl so that the docker engine runs properly with the ElasticSearch 8.12 stack.
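The post does not spell out which grub and sysctl settings are meant. As a minimal sketch, assuming the commonly documented ones (the kernel flags `cgroup_enable=memory swapaccount=1` that Docker's documentation suggests for the swap limit warning, and the `vm.max_map_count` minimum of 262144 that Elasticsearch's Docker documentation asks for), a small Python check run inside the VM could look like this. The function names are hypothetical and this is not necessarily how the original setup verifies anything.

```python
from pathlib import Path

# Assumptions, not taken from the post:
# - Docker docs suggest these kernel flags for the swap limit warning.
# - Elasticsearch docs ask for vm.max_map_count >= 262144.
REQUIRED_KERNEL_FLAGS = ("cgroup_enable=memory", "swapaccount=1")
REQUIRED_MAX_MAP_COUNT = 262144


def missing_kernel_flags(cmdline: str) -> list[str]:
    """Return the required kernel flags that are absent from a kernel command line."""
    present = cmdline.split()
    return [flag for flag in REQUIRED_KERNEL_FLAGS if flag not in present]


def max_map_count_ok(value: str) -> bool:
    """Return True if the given vm.max_map_count value meets the minimum."""
    return int(value.strip()) >= REQUIRED_MAX_MAP_COUNT


if __name__ == "__main__":
    try:
        # These paths exist on Linux; on other systems the check is skipped.
        cmdline = Path("/proc/cmdline").read_text()
        map_count = Path("/proc/sys/vm/max_map_count").read_text()
    except OSError:
        print("Not a Linux host, nothing to check")
    else:
        print("Missing kernel flags:", missing_kernel_flags(cmdline) or "none")
        print("vm.max_map_count ok:", max_map_count_ok(map_count))
```

If either check fails after the first vagrant up, that would be consistent with the VM needing its grub and sysctl configuration applied before the stack is started.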


OpenShot so far allows videos from a car dashcam to be appended together.

The unprocessed total disk space for 6 hours' worth of video is about 42GB.

Markers are added to mark significant points on the timeline where the video will be cut, edited, or captioned.

It makes more sense to cut the combined 6-hour video, which is made up of multiple 1-minute clips from the car dashcam.
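As a rough sanity check on those figures, the number of clips and the average clip size can be worked out in a few lines of Python. This is only back-of-the-envelope arithmetic: it assumes every clip is exactly 1 minute long and that 1 GB is 1000 MB.

```python
HOURS = 6          # total footage, from the text above
CLIP_MINUTES = 1   # dashcam clip length, from the text above
TOTAL_GB = 42      # approximate unprocessed size, from the text above

# Number of 1-minute clips in 6 hours of footage.
clip_count = HOURS * 60 // CLIP_MINUTES

# Average size per clip, assuming 1 GB = 1000 MB.
avg_clip_mb = TOTAL_GB * 1000 / clip_count

print(f"{clip_count} clips, about {avg_clip_mb:.0f} MB each")
```

That works out to 360 clips at roughly 117 MB each, which is why working on one combined video is more practical than handling the clips one by one.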

Next, identify the starting point and the ending point of the videos to maintain anonymity.

Then, cut the 6-hour video into sections of the trip along the West Coast Expressway: the entry point, exit points, and places of interest.

copy con is a legacy command that allows plain MSDOS to create text files such as autoexec.bat and config.sys

From my experience, the command has been supported from MSDOS 3.30 until today. I have yet to test this command on Windows 11.

To commit and save the file to disk, press CTRL + Z followed by Enter

I learned this command in the days before the Internet, by attentively looking over the shoulders of seniors who were not willing to teach during computer club sessions.

```bat
@echo off
rem Save the "All User Profile" lines from netsh into tmp.txt
netsh wlan show profiles | findstr All > tmp.txt
rem The 2nd colon-delimited token on each line is the profile name
for /f "tokens=2 delims=:" %%a in (tmp.txt) do (
    echo %%a
    rem key=clear shows the saved key in clear text
    netsh wlan show profiles name=%%a key=clear
)
del tmp.txt
```

This batch script lists the saved WiFi profiles and saves them into the text file tmp.txt

From tmp.txt, the script prints the 2nd token of each line, which is the WiFi profile name, then uses the same netsh command with key=clear to expose the WiFi key in clear text.
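The token-splitting step can also be sketched in Python, which makes it easier to see what the `findstr` and `for /f` pair are doing. This is a minimal illustration, not a replacement for the batch script: `parse_profile_names` is a hypothetical helper, and the sample text is an assumed example of English-locale `netsh wlan show profiles` output. Unlike `tokens=2`, it keeps everything after the first colon, so a profile name that itself contains a colon is not cut short.

```python
def parse_profile_names(netsh_output: str) -> list[str]:
    """Extract WiFi profile names from `netsh wlan show profiles` output."""
    names = []
    for line in netsh_output.splitlines():
        # Same filter as `findstr All`: keep only lines containing "All".
        if "All" not in line:
            continue
        # Split at the first colon; the profile name is what follows it.
        _, sep, name = line.partition(":")
        if sep and name.strip():
            names.append(name.strip())
    return names


# Assumed sample output, for illustration only.
SAMPLE = """\
Profiles on interface Wi-Fi:

User profiles
-------------
    All User Profile     : HomeNet
    All User Profile     : Cafe Guest
"""

print(parse_profile_names(SAMPLE))
```

Stripping the whitespace around the name also handles profile names with spaces, such as the second sample entry.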

The VF-1S Strike Valkyrie is a valkyrie exclusive to the Macross movie, Do You Remember Love?

One of the most badass 1980s Real Robots from Macross. The mecha was featured during the Miss Macross episode. The main character, Ichijō Hikaru, was given patrol duty while the Miss Macross competition was in full swing. It was a turning point after which things would never be the same between Ichijō and Minmei.